Telegram, long regarded as a free-speech advocate in matters of content moderation, has been compelled to revise its policy on private conversations.

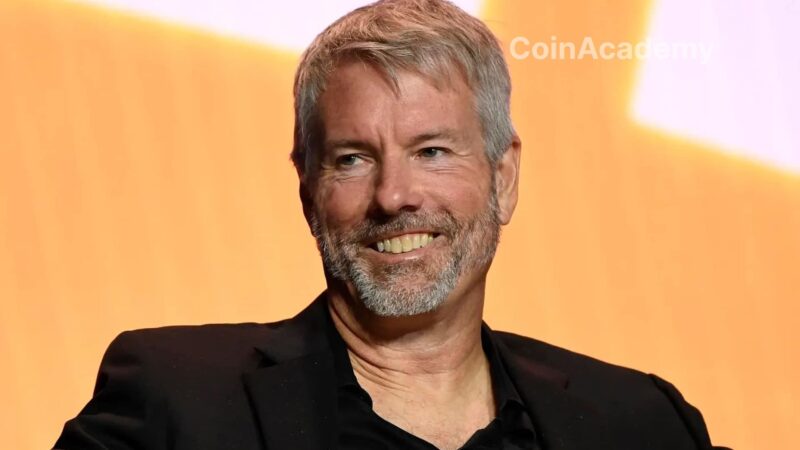

This significant change follows the arrest of Pavel Durov, the founder and CEO of Telegram, in France for his alleged failure to regulate illegal content on the platform.

A Subtle Revision of Moderation Policy

On Thursday evening, Telegram reportedly implemented the change through an update to its FAQ page. Users of private chats can now report potentially illegal content for review by the application’s moderators. Previously, private conversations were considered beyond the reach of external intervention. The change marks a significant shift for a messaging platform known for offering its users almost complete freedom.

Durov, in a statement on Telegram, acknowledged that the platform’s rapid growth had facilitated its misuse by malicious individuals. This realization prompted him to consider substantial modifications to better control abuses on the application.

Context: a Controversial Arrest in France

Last month, Pavel Durov was arrested in France, where authorities accused him of failing to adequately monitor and suppress criminal activity on Telegram. Concerned about the proliferation of illegal content on the platform, they faulted Durov for tolerating exchanges related to illicit activities. Although Durov categorically denied these accusations, the authorities required him to remain in France until his trial.

This arrest sparked a heated controversy surrounding the responsibility of digital platforms in monitoring private and public exchanges. Until this case, Telegram had taken a stance of non-interference, granting its users significant leeway.

Telegram’s Clarifications

In response to the arrest and accusations, Telegram issued a statement clarifying that the reporting policy for illegal content was not new. The platform asserts that it has always been possible to report messages, even within private groups, through options such as blocking or reporting. However, the recent FAQ revision aims to provide clarity on these procedures by incorporating mentions of the Digital Services Act (DSA), a European regulation on content moderation.

Despite this change, Telegram emphasizes that private conversations remain confidential unless a user takes the initiative to report suspicious exchanges. The earlier statement that Telegram would not cooperate in transmitting information related to illegal activities has also been moved to the bottom of the page, under the section dedicated to copyright.

Implications for Telegram and its Users

This policy reversal could redefine the public perception of Telegram, which was once seen as a bastion of unrestricted freedom of expression. The choice to extend the reach of moderators to private chats is Telegram’s attempt to address concerns from European regulators while maintaining its image as a privacy-focused messaging platform.

However, this measure raises questions about the balance between privacy and public safety. Although private chats are protected by default, the ability of users to report content points to more intrusive moderation. It remains to be seen how this new approach will affect Telegram’s user base, which has largely embraced the platform for its historical resistance to strict moderation.